Touch navigation for medical 3D imaging

The goal of the present paper was to extend the Lemur framework, developed by the Institute of Medicinal and Analytical Technology, so that it can be used for gesture-based 3D navigation on a multi-touch screen.

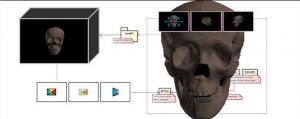

To develop the gesture concepts, a list of possible navigation actions was first drawn up. Once the actions had been chosen, a gesture was designed for each of them, with the aim of making every gesture feel as natural as possible. The implementation was carried out in three steps. In the first step, an example environment was written in Java3D that allowed the camera to be moved around an object (a simple cube at first, later a model of a skull). In the second step, the basic structure of the “Gestures” application was developed with the help of a simulator that generates multi-touch events. In the third and final step, the multi-touch screen was integrated. The Cornerstone Framework, supplied by the manufacturer of the multi-touch screen, transmits multi-touch events using the TUIO protocol. For this reason, the corresponding TUIO library, which is the reference implementation of the client, was used. The result is a 3D navigation for medical images that implements the most important gestures.
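To illustrate how a two-finger gesture can be turned into navigation parameters, the following is a minimal sketch and not the code of the “Gestures” application. It assumes that each touch point arrives as a pair of normalized screen coordinates, as a TUIO client would typically deliver them. The class `TwoFingerGesture` and its method `between` are hypothetical names introduced for this example; they are not part of Lemur, the Cornerstone Framework, or the TUIO library.

```java
// Hypothetical helper for illustration only; not part of Lemur,
// the Cornerstone Framework, or the TUIO reference client.
final class TwoFingerGesture {
    /** Ratio of new to old finger distance: > 1 means spread, < 1 means pinch. */
    final double scale;
    /** Change of the angle of the line between the fingers, in radians, in (-pi, pi]. */
    final double rotation;
    /** Movement of the midpoint between the two fingers. */
    final double panX;
    final double panY;

    private TwoFingerGesture(double scale, double rotation, double panX, double panY) {
        this.scale = scale;
        this.rotation = rotation;
        this.panX = panX;
        this.panY = panY;
    }

    /**
     * Compares two fingers (a and b) at a previous position (suffix 0)
     * and at the current position (suffix 1).
     */
    static TwoFingerGesture between(double ax0, double ay0, double bx0, double by0,
                                    double ax1, double ay1, double bx1, double by1) {
        // Vector from finger a to finger b, before and after the movement.
        double dx0 = bx0 - ax0, dy0 = by0 - ay0;
        double dx1 = bx1 - ax1, dy1 = by1 - ay1;

        double d0 = Math.hypot(dx0, dy0);
        double d1 = Math.hypot(dx1, dy1);
        // Guard against both fingers starting on the same point.
        double scale = (d0 == 0.0) ? 1.0 : d1 / d0;

        // Angle difference, wrapped into (-pi, pi].
        double rotation = Math.atan2(dy1, dx1) - Math.atan2(dy0, dx0);
        if (rotation > Math.PI) {
            rotation -= 2.0 * Math.PI;
        } else if (rotation <= -Math.PI) {
            rotation += 2.0 * Math.PI;
        }

        // Midpoint displacement, usable for panning.
        double panX = ((ax1 + bx1) - (ax0 + bx0)) / 2.0;
        double panY = ((ay1 + by1) - (ay0 + by0)) / 2.0;

        return new TwoFingerGesture(scale, rotation, panX, panY);
    }
}
```

In a navigation of the kind described above, `scale` could drive the camera distance, `rotation` a roll around the viewing axis, and `panX`/`panY` a translation of the view. Whether a positive `rotation` appears clockwise or counter-clockwise on screen depends on whether the y-axis of the input coordinates points up or down.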